AI Language Models: A Brief History

Feb 24, 2025

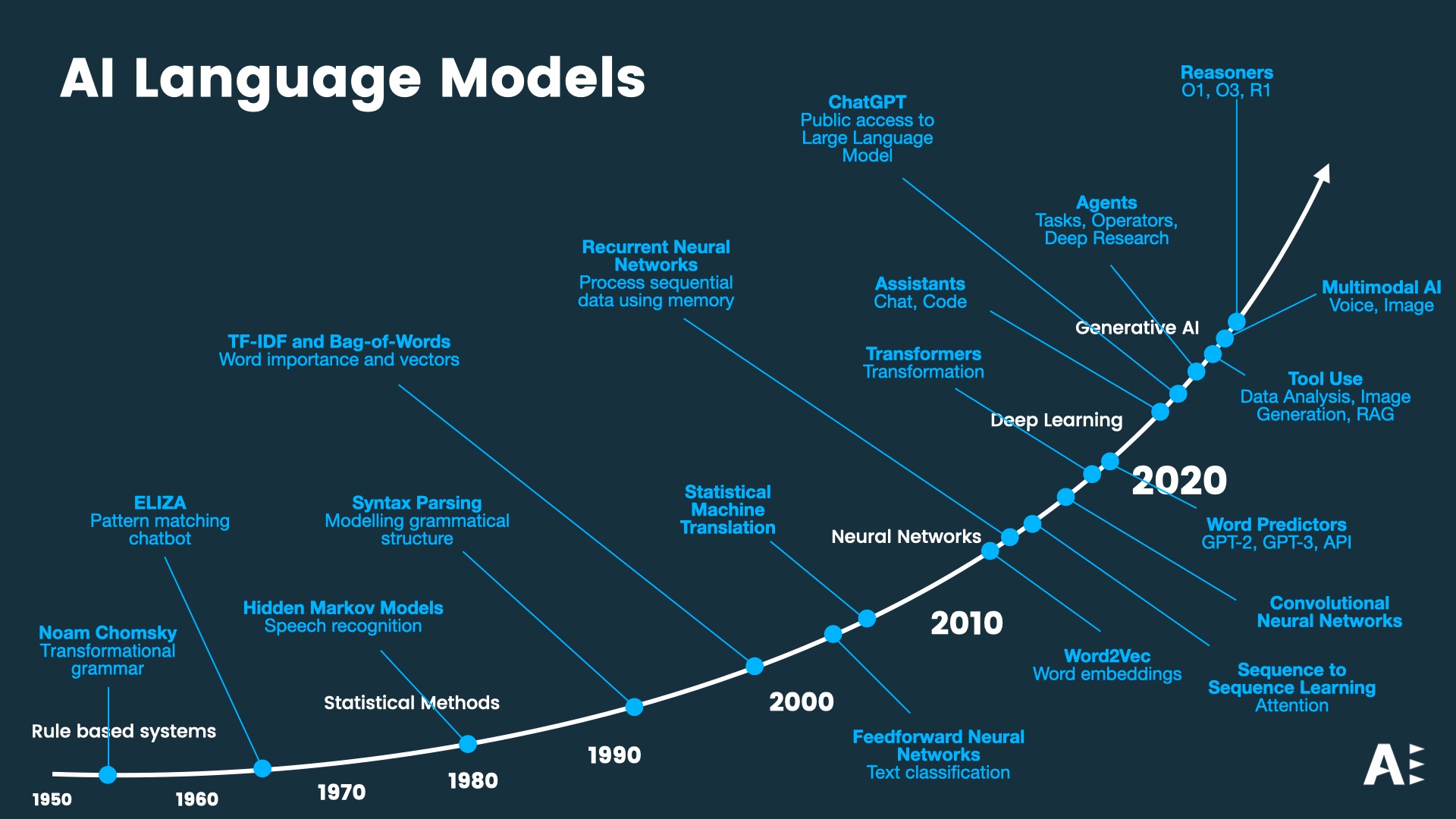

For decades, language models were a niche within the broader field of artificial intelligence. Early AI research focused on logic, expert systems, and robotics, while natural language processing (NLP) remained a secondary concern. In recent years, however, language models have emerged as the defining application of AI, reshaping industries and even society itself. This post traces how AI language models evolved from obscure research projects into general-purpose intelligence engines that drive some of the most staggering advances in AI today.

The Early Days: Language Processing as a Side Quest

In the mid-20th century, the first computational models of language were heavily influenced by linguistics. Noam Chomsky's Syntactic Structures (1957), which introduced transformational grammar, laid the theoretical foundation for syntactic parsing and inspired early rule-based NLP models.

By 1966, MIT’s ELIZA—a simple pattern-matching chatbot—demonstrated the potential for human-computer conversation, albeit in a very constrained way. Later, in the 1970s and 1980s, Hidden Markov Models enabled early speech recognition, but these systems remained brittle and domain-specific.
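To make "pattern matching" concrete, here is a minimal sketch in the spirit of ELIZA. The rules, the fallback line, and the `respond` function are hypothetical and written for illustration; they are not taken from ELIZA's original script, which also did things this sketch skips, such as swapping pronouns ("my" to "your").

```python
import re

# Hypothetical rules for illustration; not ELIZA's original script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured fragment back inside a canned template.
            return template.format(match.group(1))
    return FALLBACK
```

Calling `respond("I need a holiday.")` returns `"Why do you need a holiday?"`. Nothing here models meaning: any input that misses every pattern gets the same fallback, which is why such systems only worked in very constrained settings.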

Throughout the 1990s and early 2000s, techniques like TF-IDF, Bag-of-Words, and Statistical Machine Translation powered search engines and translation tools, but they lacked true understanding. AI’s most exciting advances were happening elsewhere, in areas like computer vision and game-playing AI.
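As a rough illustration of why these methods "lacked true understanding", the sketch below builds a Bag-of-Words count and a basic TF-IDF weighting in plain Python. The function names and the three toy documents are assumptions made for this example, and the formula used (term frequency times log(N / document frequency)) is only one of several common TF-IDF variants.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count lowercase whitespace-separated tokens, ignoring word order."""
    return Counter(text.lower().split())


def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Weight each term by its in-document frequency times log(N / df)."""
    bags = [bag_of_words(d) for d in docs]
    n_docs = len(bags)
    # Document frequency: how many documents contain each term.
    df = Counter(term for bag in bags for term in bag)
    weights = []
    for bag in bags:
        total = sum(bag.values())
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in bag.items()
        })
    return weights


# Toy corpus, invented for this example.
docs = ["the cat sat", "the dog barked", "the cat purred"]
scores = tf_idf(docs)
```

In this toy corpus "the" appears in every document, so its weight is zero, while "sat" (one document) outweighs "cat" (two documents). The scores capture how distinctive a word is, but word order and meaning are discarded entirely.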

The Deep Learning Revolution: Language Gets Neural

The paradigm shifted dramatically in the 2010s, as neural networks began to outperform traditional methods.

- 2013: Word2Vec popularized dense word embeddings, learned vectors in which semantically related words sit close together, allowing models to capture semantic relationships between words.

- 2014-2017: Recurrent Neural Networks (RNNs) and Attention Mechanisms led to breakthroughs in translation (Sequence-to-Sequence Learning) and text classification.

- 2017: The Transformer architecture (from the paper "Attention Is All You Need") replaced recurrence with self-attention, enabling more powerful models that process all the tokens in a sequence in parallel rather than one at a time.
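The core operation behind the Transformer item above is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. The sketch below is a minimal pure-Python version under simplifying assumptions: a single head, no learned projection matrices, and no masking. The function names are chosen for this example, and real implementations compute the same thing as batched matrix multiplications.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key; other queries are not involved.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Each query's output depends only on the keys and values, not on the result for any other position. That independence is what lets the loop over queries run in parallel on hardware, in contrast to an RNN, where each step waits on the previous one.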

This set the stage for scaling—the defining force behind modern AI. Researchers discovered that simply increasing the size of models, training data, and compute resources led to surprising emergent abilities.

The Expansion of Large Language Models (LLMs)

The Transformer architecture paved the way for **GPT-2 (2019)** and **GPT-3 (2020)**, models capable of generating surprisingly coherent text. At this stage, LLMs were still seen as impressive but fundamentally flawed—prone to hallucinations and lacking real-world utility beyond chatbots and content generation.

Then AI models began integrating with external tools.

- 2022-2023: AI Assistants & Tool Users – With ChatGPT, AI gained widespread adoption, evolving from text generation to coding, data analysis, and multimodal interactions (voice, image processing).

- 2023-2024: Multimodal AI – Models like GPT-4V integrated vision capabilities, expanding their usefulness beyond text.

- 2024-2025: AI Agents & Advanced Reasoners – The latest iterations are becoming autonomous agents capable of executing complex tasks, using external tools, conducting deep research, and even exhibiting emergent reasoning abilities.

Scaling, Reinforcement Learning, and the Path Forward

While techniques such as Reinforcement Learning from Human Feedback (RLHF) have improved AI’s alignment with human intentions, recent developments suggest that further breakthroughs will come from enhanced architectures and better integration with reasoning frameworks.

Now, AI research is moving toward stronger reasoning models, more reliable tool use, and greater adaptability in real-world applications. The next steps?

- Stronger reasoning models (such as OpenAI's o1 and o3 and DeepSeek's R1).

- Better integration with real-world applications (AI-powered research, automation, and assistants).

- Potential movement toward Artificial General Intelligence (AGI).

LLMs as the Main AI Application

AI language models have gone from being a niche curiosity to the most dominant force in AI development. Their versatility, scalability, and ability to integrate with external tools have made them the defining AI application of the modern era. As we look ahead, the question is no longer whether LLMs will power the future of AI—it’s how far they can take us.

The next few years will determine whether these models evolve into truly general intelligence or remain powerful, but ultimately limited, assistants. Either way, the transformation has been staggering, and AI's language revolution is far from over.

Do you want to learn more about AI and how you can leverage it right now? Join the AI revolution by taking our AI Accelerator today.
